Large Design Model

The Large Design Model (LDM) is a generative model that creates graphic design layouts from vector objects. It turns text and images into final products such as documents, books, posters, ads, and presentations. Our goal is to replace templates with dynamically generated designs tailored precisely to the user’s content.

Product: Visual Reasoning

Release: 2024

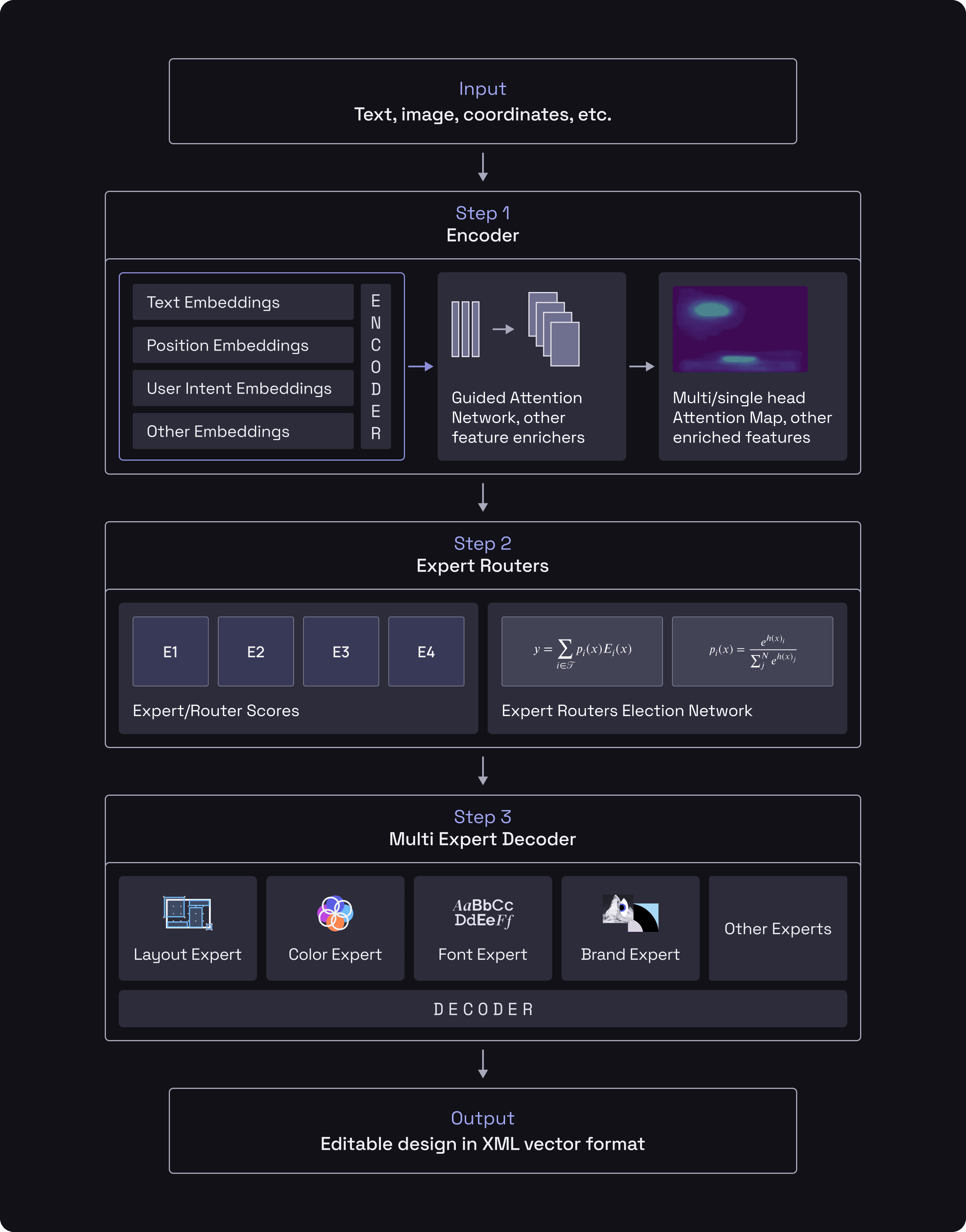

Architecture

Our multimodal neural network, built on sparse expert models, breaks a design down into its core elements and can combine them into billions of potential layouts, color palettes, and typography choices. Unlike diffusion models, LDM operates deterministically and outputs vector formats, letting anyone produce a finished design in seconds.
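The page does not describe how the sparse expert models route their inputs. A common design is a mixture-of-experts layer with top-k gating: a gate scores every expert, but only the k highest-scoring experts actually run. The sketch below assumes that design; `top_k_route`, `moe_forward`, the fixed gate logits, and the toy experts are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalise their gate weights."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x: float, experts: List[Callable[[float], float]],
                gate_logits: Sequence[float], k: int = 2) -> float:
    # Only the selected experts are evaluated; the others are skipped entirely.
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))

# Hypothetical toy experts; in a real network each would be a learned sub-network.
experts = [lambda x: 2 * x, lambda x: x + 1, lambda x: -x, lambda x: x * x]
# Fixed here for illustration; normally produced by a learned gating layer from x.
gate_logits = [0.1, 2.0, -1.0, 2.0]
print(top_k_route(gate_logits, k=2))
print(moe_forward(3.0, experts, gate_logits, k=2))
```

Because only k experts run per input, total capacity can grow with the number of experts while per-input compute stays roughly constant.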

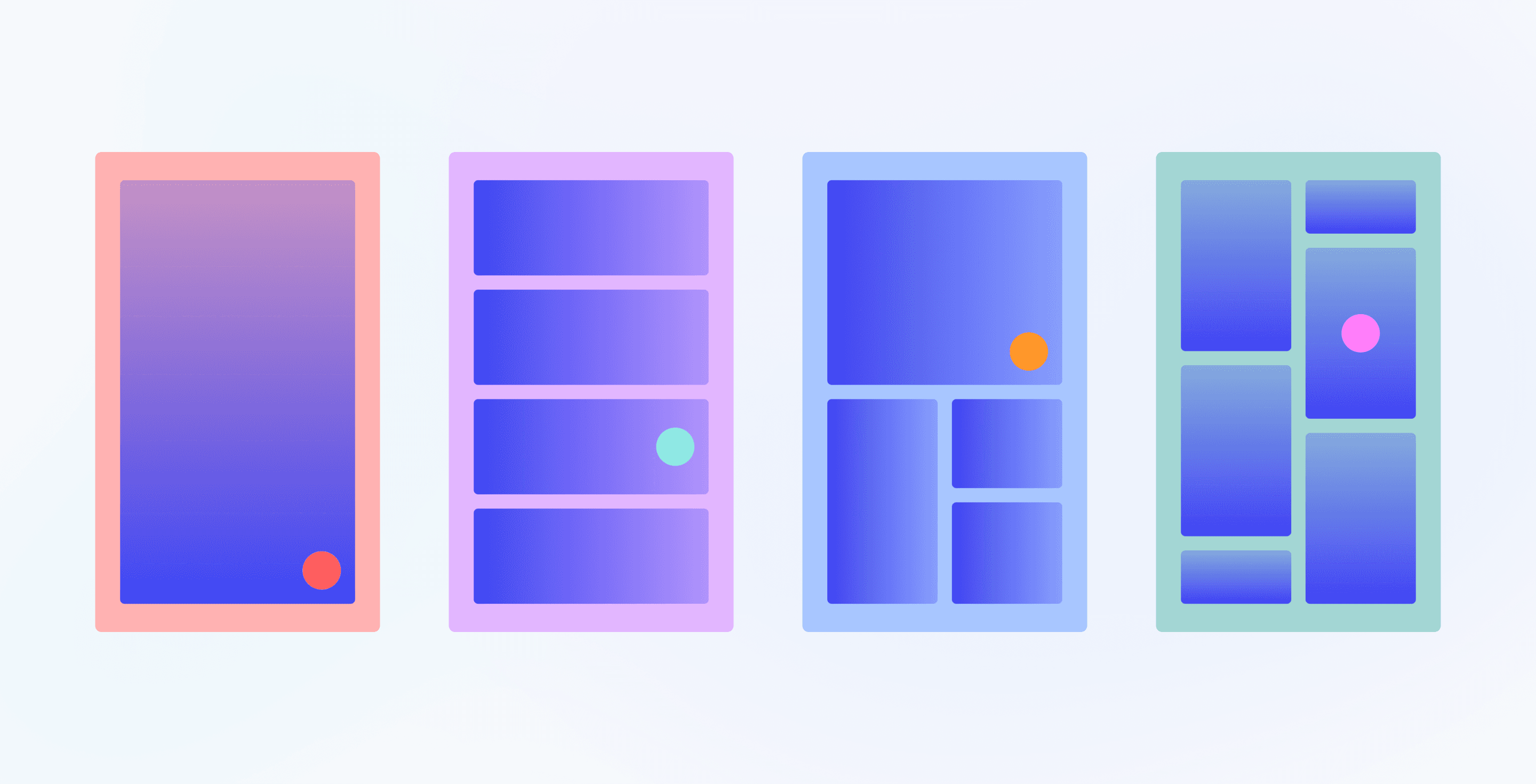

Layout Generation

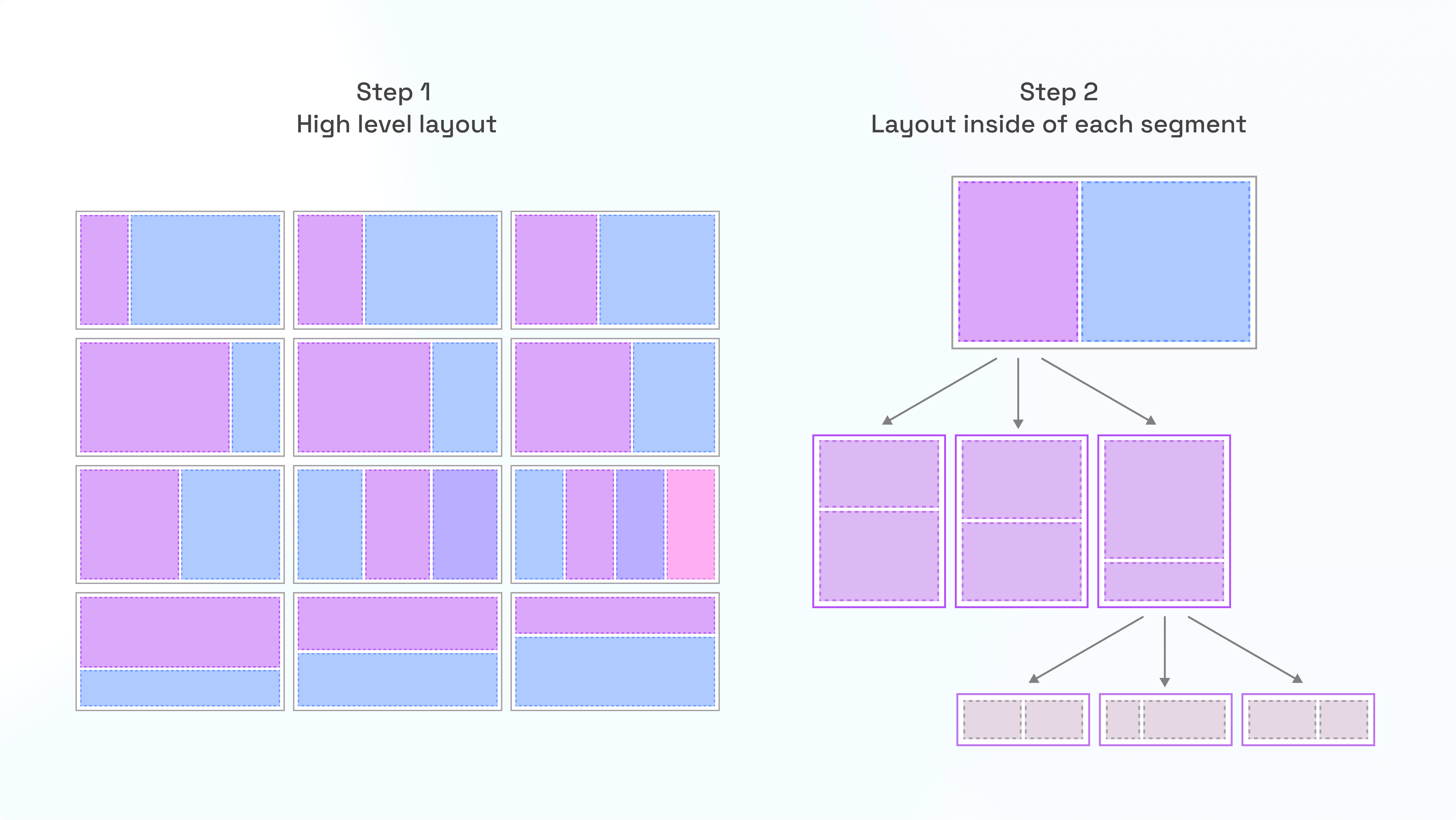

Our Layout Generation Model replaces static templates by dynamically creating layouts tailored to your content. Trained on professional designs, it understands the relationship between content volume and optimal layouts. The model dissects the canvas into nested compositions, enabling complex, multi-level designs that precisely organize content.
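One way to picture nested compositions is a tree: each internal node splits its rectangle into weighted rows or columns, and each leaf holds one piece of content. This representation is an illustrative assumption, not the model's documented internals; `Node` and `place` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Rect = Tuple[float, float, float, float]  # x, y, width, height

@dataclass
class Node:
    """A composition: either a leaf holding content or a split into children."""
    content: Optional[str] = None   # set on leaves only
    direction: str = "row"          # "row": side by side, "column": stacked
    weights: List[float] = field(default_factory=list)
    children: List["Node"] = field(default_factory=list)

def place(node: Node, rect: Rect, out: dict) -> dict:
    """Recursively assign a rectangle to every leaf of the composition tree."""
    x, y, w, h = rect
    if not node.children:
        out[node.content] = rect
        return out
    total = sum(node.weights)
    offset = 0.0  # fraction of the parent already used
    for weight, child in zip(node.weights, node.children):
        share = weight / total
        if node.direction == "row":
            child_rect = (x + offset * w, y, share * w, h)  # left to right
        else:
            child_rect = (x, y + offset * h, w, share * h)  # top to bottom
        place(child, child_rect, out)
        offset += share
    return out

# A poster: an image on top (2/3 of the height), headline and body side by side below.
poster = Node(direction="column", weights=[2, 1], children=[
    Node(content="image"),
    Node(direction="row", weights=[1, 1], children=[
        Node(content="headline"), Node(content="body")]),
])
print(place(poster, (0, 0, 600, 900), {}))
```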

Our generative model starts by creating broad layout options, as shown in Step 1.
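The page does not say how Step 1 proposes its broad options. As a simplified, assumed picture, the sketch enumerates every way to group content blocks into consecutive rows while preserving reading order; `row_partitions` and `max_per_row` are hypothetical.

```python
from typing import List

def row_partitions(n: int, max_per_row: int) -> List[List[int]]:
    """Enumerate every way to split n content blocks into consecutive rows,
    keeping reading order and allowing at most max_per_row blocks per row."""
    if n == 0:
        return [[]]
    options = []
    for first in range(1, min(n, max_per_row) + 1):
        for rest in row_partitions(n - first, max_per_row):
            options.append([first] + rest)
    return options

# Three blocks, at most two per row: each list gives the block count per row.
print(row_partitions(3, 2))
```

Without a per-row cap, n blocks already yield 2^(n-1) row groupings before any choice of sizes, colors, or fonts, which is why the candidate space grows so quickly.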

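In Step 2, it refines each segment of the chosen layout, optimizing the arrangement of content through alpha-beta pruning, the search technique chess engines use to skip branches that cannot change the final decision. This keeps the final design visually appealing and effective for the input content. The underlying architecture here is NNUE (efficiently updatable neural network), the evaluation-network design used in chess engines such as Stockfish, which makes our layout generation model closer to a chess engine than to a diffusion model.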
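Alpha-beta pruning is minimax search that skips branches which cannot change the result. The sketch shows only those mechanics on a small nested-list tree. `evaluate` is a placeholder for a learned evaluator such as an NNUE network, and because the source does not describe how the maximizing and minimizing turns map onto layout refinement, that mapping is left open.

```python
import math
from typing import Callable, Dict, List, Union

Tree = Union[float, List["Tree"]]  # a leaf score or a list of child positions

def alpha_beta(node: Tree, alpha: float, beta: float, maximizing: bool,
               evaluate: Callable[[float], float], stats: Dict[str, int]) -> float:
    """Minimax with alpha-beta pruning over a nested-list tree."""
    if not isinstance(node, list):
        stats["evaluated"] += 1
        return evaluate(node)  # placeholder for a learned evaluator such as NNUE
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alpha_beta(child, alpha, beta, False, evaluate, stats))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizing side already has a better option elsewhere
        return value
    value = math.inf
    for child in node:
        value = min(value, alpha_beta(child, alpha, beta, True, evaluate, stats))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizing side already has a better option elsewhere
    return value

stats = {"evaluated": 0}
tree = [[3, 5], [2, 9], [0, 1]]
best = alpha_beta(tree, -math.inf, math.inf, True, float, stats)
print(best, stats)
```

On this tree the search returns 3 after scoring only four of the six leaves; the leaves 9 and 1 are pruned because their siblings already rule those branches out.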

Salience Quality Assurance

Our salience vision model ensures that key elements in a design stand out. It checks each layout to make sure the most important content is clear and prominent, enhancing the design's effectiveness.
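The page does not say how the salience vision model scores elements; a learned saliency map over the rendered layout would normally supply those scores. To show just the quality-assurance check, the sketch below uses a hypothetical area-times-contrast proxy and flags any pair where a less important element is predicted to be more salient than a more important one. `Element`, `salience`, and `salience_violations` are illustrative names.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Element:
    name: str
    importance: int   # 1 = most important, from the content hierarchy
    area: float       # fraction of the canvas covered, 0..1
    contrast: float   # contrast against the background, 0..1

def salience(e: Element) -> float:
    # Hypothetical proxy; a vision model would predict this from the rendered layout.
    return e.area * e.contrast

def salience_violations(elements: List[Element]) -> List[Tuple[str, str]]:
    """Return (louder, more_important) pairs where a less important element
    is predicted to draw more attention than a more important one."""
    ranked = sorted(elements, key=lambda e: e.importance)
    return [(less.name, more.name)
            for i, more in enumerate(ranked)
            for less in ranked[i + 1:]
            if salience(less) > salience(more)]

layout = [
    Element("headline", importance=1, area=0.10, contrast=0.9),
    Element("photo", importance=2, area=0.50, contrast=0.3),
    Element("logo", importance=3, area=0.05, contrast=0.5),
]
print(salience_violations(layout))
```

Here the large, low-contrast photo out-scores the headline, so this layout would be flagged for revision.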

Color Reasoning

We have developed an advanced color reasoning model that generates tailored color palettes from raw text input.

Our system employs an LLM to deconstruct the text, identifying key themes and emotional undertones. These insights are then fed into our proprietary color model, which selects colors that best convey the identified themes. Finally, it applies principles of color theory to assemble harmonious palettes.
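The three stages above can be sketched as follows, under heavy assumptions. The LLM step is stubbed out by passing theme keywords directly, `THEME_HUES` is a hypothetical lookup standing in for the proprietary color model, and the lightness and saturation values are arbitrary. The harmony rules themselves (complementary, analogous, triadic) are standard color-wheel relationships.

```python
import colorsys
from typing import List

# Hypothetical theme -> base hue (degrees); stands in for the learned color model.
THEME_HUES = {"trust": 215, "stability": 220, "growth": 130, "energy": 15}

HARMONIES = {  # hue offsets from classic color-wheel harmony rules
    "complementary": [0, 180],
    "analogous": [-30, 0, 30],
    "triadic": [0, 120, 240],
}

def hex_from_hls(hue_deg: float, lightness: float, saturation: float) -> str:
    r, g, b = colorsys.hls_to_rgb((hue_deg % 360) / 360, lightness, saturation)
    return "#{:02x}{:02x}{:02x}".format(round(r * 255), round(g * 255), round(b * 255))

def palette(themes: List[str], scheme: str = "analogous") -> List[str]:
    """Build a palette around the hue of the first recognised theme."""
    hues = [THEME_HUES[t] for t in themes if t in THEME_HUES]
    if not hues:
        raise ValueError("no recognised theme")
    # Fixed lightness/saturation are arbitrary choices for this sketch.
    return [hex_from_hls(hues[0] + offset, 0.45, 0.6) for offset in HARMONIES[scheme]]

# Themes an LLM might extract from a brief (stubbed here).
print(palette(["heritage", "trust", "growth"], scheme="complementary"))
```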

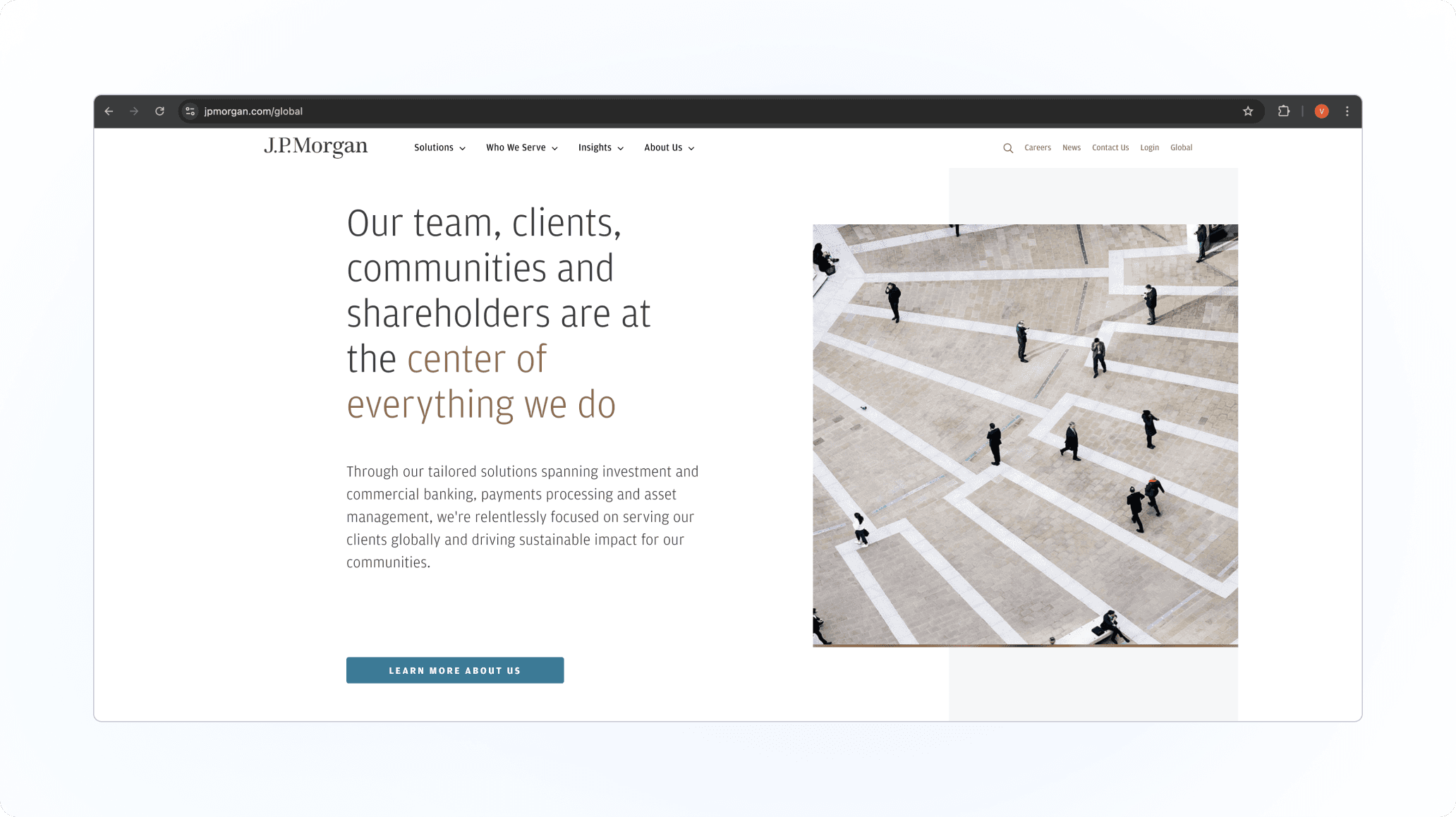

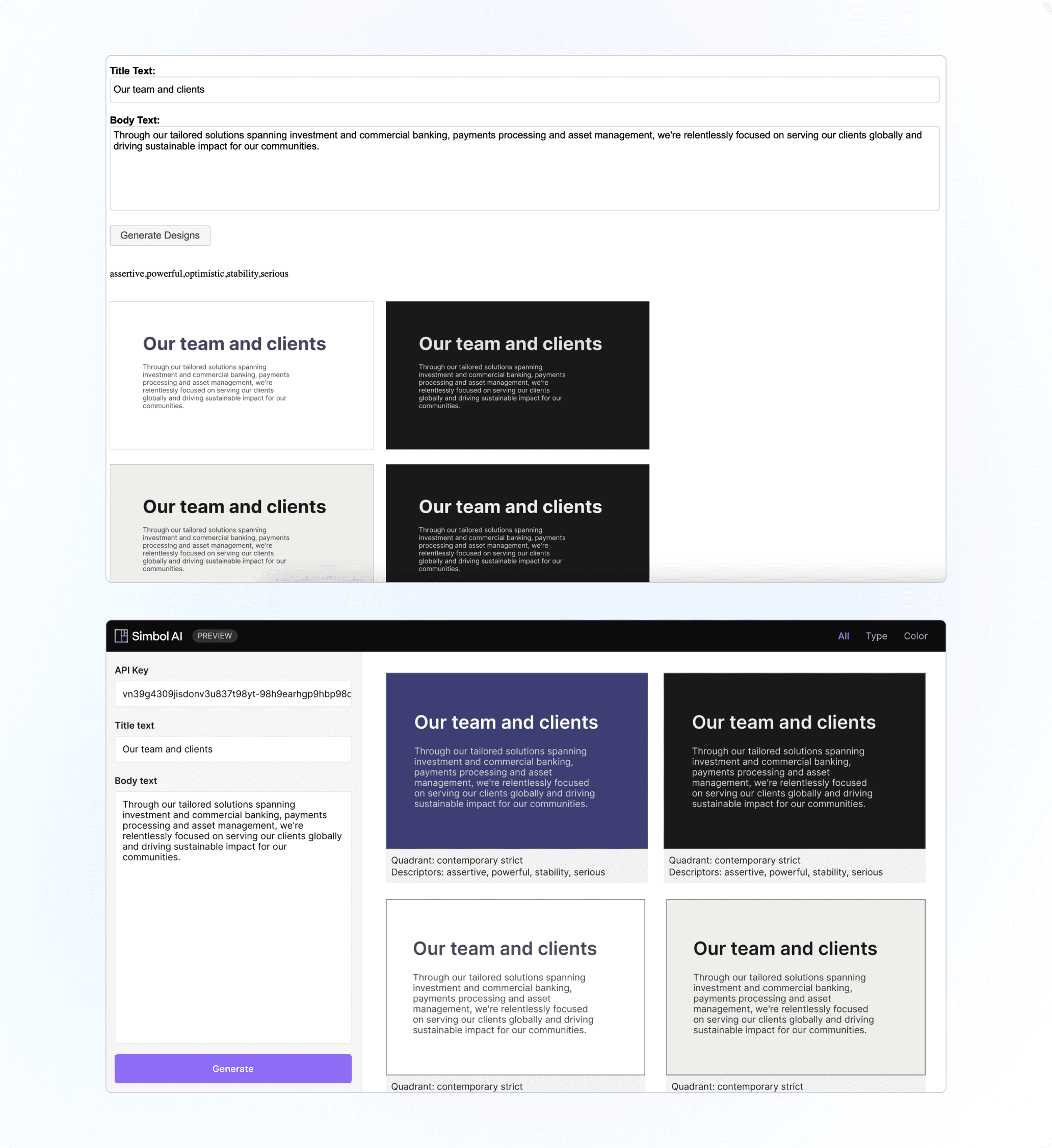

Example

Input:

A text excerpt from the J.P. Morgan website.

Output:

The Simbol Color Model captures the essence of the content: its suggested colors are very close to J.P. Morgan's actual brand colors.

Color Clustering

We developed a clustering algorithm that maps Pantone colors to associated themes and ideas. Using supervised learning, we trained the model on art and design works, supplemented with synthetic data from diffusion models. This approach allows for precise color-theme reasoning.
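A minimal sketch of supervised color-to-theme mapping, using nearest-centroid classification as a swapped-in technique: each theme's cluster center is the mean of the colors labelled with it, and a new color is assigned to the closest center. The labelled samples are made up (real training data would be Pantone swatches annotated from art and design works), and plain RGB distance is used for brevity, although a perceptual space such as CIELAB would be a more faithful choice.

```python
from collections import defaultdict
from math import dist

# Hypothetical labelled samples: (R, G, B) -> theme.
SAMPLES = [
    ((0, 51, 102), "trust"), ((25, 70, 140), "trust"),
    ((34, 139, 34), "nature"), ((85, 160, 60), "nature"),
    ((220, 20, 60), "passion"), ((200, 30, 30), "passion"),
]

def fit_centroids(samples):
    """Average the colors labelled with each theme (nearest-centroid training)."""
    grouped = defaultdict(list)
    for rgb, theme in samples:
        grouped[theme].append(rgb)
    return {theme: tuple(sum(channel) / len(channel) for channel in zip(*colors))
            for theme, colors in grouped.items()}

def predict_theme(rgb, centroids):
    """Assign a color to the theme whose centroid is closest in RGB space."""
    return min(centroids, key=lambda theme: dist(rgb, centroids[theme]))

centroids = fit_centroids(SAMPLES)
print(predict_theme((10, 40, 120), centroids))
```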

Typography Reasoning

Our Typography Reasoning Model evaluates textual input and selects typefaces that reflect the content's theme. We trained it to assess typefaces on aesthetic parameters such as tone, age, connotation, and contrast. The model suggests typography that fits the input's tone and intent.
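One simple way to picture the selection step: score each typeface style on the aesthetic axes, infer a target profile from the text, and rank styles by distance to that profile. Everything here is hypothetical, including the 0..1 scale for each axis, the style scores, the target profile, and the `rank_typefaces` helper; the real model's scoring is learned and not described on this page.

```python
from math import dist

# Hypothetical 0..1 scores: tone (0 playful, 1 serious), age (0 contemporary,
# 1 historical), connotation (0 casual, 1 formal), contrast (stroke contrast).
TYPEFACES = {
    "geometric sans": (0.5, 0.1, 0.5, 0.1),
    "humanist serif": (0.7, 0.8, 0.7, 0.4),
    "didone":         (0.8, 0.7, 0.9, 0.9),
    "rounded sans":   (0.1, 0.2, 0.1, 0.1),
}

def rank_typefaces(target, typefaces=TYPEFACES, k=2):
    """Return the k typeface styles whose profiles are closest to the target."""
    return sorted(typefaces, key=lambda name: dist(target, typefaces[name]))[:k]

# A profile an upstream text model might infer for a formal, upscale brief.
print(rank_typefaces((0.8, 0.6, 0.9, 0.8)))
```

Euclidean distance treats all four axes as equally important; weighting the axes would be a natural refinement.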